In our article Creating Your Own ChatGPT: A Step-by-Step Guide Using GPT-4 in Python, we talked about how you can create your own ChatGPT. If you followed that article, you now have your very own ChatGPT. There was only one problem with our chatbot, and that is what we are going to address in this article.

Unlike the actual ChatGPT, our ChatGPT is not able to remember our previous conversations, so it loses the context of what we are talking about. Check out the image for a better understanding.

We can clearly see that for the text “are they all friends”, it is not able to remember our previous conversation and therefore cannot answer accordingly. In the last question, I asked our ChatGPT whether it remembered what we were talking about, and it replied:

As an AI, I do not have the ability to recall previous parts of a conversation if they are not provided in your query. If you provide more context or information, I’ll be happy to help you with your questions or concerns about certain people.

Now take a look at how the official ChatGPT remembers our conversation and answers accordingly.

For the question “are they all friends”, the official ChatGPT picked up the context from our previous conversation and answered accordingly.

This is what we are going to do with our ChatGPT bot: give it the power to remember previous conversations and answer accordingly.

Prerequisites

We need a few things to make our bot remember previous chats:

- Boilerplate code for our ChatGPT bot

- An OpenAI API key, which you can get by creating an account on the official OpenAI site

Coding Section

Our chatgpt.py currently looks like this:

```python
import os

import openai
from fastapi import FastAPI, Request
from fastapi.responses import HTMLResponse
from fastapi.staticfiles import StaticFiles
from fastapi.templating import Jinja2Templates
from pydantic import BaseModel

app = FastAPI()

# os.environ.get() expects the NAME of an environment variable: replace the
# placeholder with the variable that holds your OpenAI API key.
openai.api_key = os.environ.get("IMPORT_YOUR_OPENAI_API_KEY_HERE")

# Used for adding CSS/JS in the project
# app.mount("/static", StaticFiles(directory="static"), name="static")

templates = Jinja2Templates(directory="templates")


class UserQuery(BaseModel):
    user_input: str


def create_prompt(user_input):
    prompt = f"I am an AI language model or you can call me Popeye the sailor man, and I'm here to help you. You can sometimes add Popeye's character to your answers. You asked: \"{user_input}\". My response is:"
    return prompt


def generate_response(user_input):
    prompt = create_prompt(user_input)
    # openai.ChatCompletion is the interface of the pre-1.0 openai package (openai<1.0)
    response = openai.ChatCompletion.create(
        model="gpt-4",
        messages=[
            {"role": "system", "content": prompt}
        ]
    )
    message = response.choices[0].message.content
    return message


@app.get("/", response_class=HTMLResponse)
async def chatgpt_ui(request: Request):
    return templates.TemplateResponse("index.html", {"request": request})


@app.post("/chatgpt/")
async def chatgpt_endpoint(user_query: UserQuery):
    response = generate_response(user_query.user_input)
    return {"response": response}
```

Required changes to achieve our goal

- Update the prompt so that it contains our previous conversation as well.

- Feed the updated prompt to the ChatGPT API.
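Before touching the real code, here is a minimal offline sketch of what those two changes amount to. It assumes a hypothetical fake_chat_model stub in place of the OpenAI call, so it runs without an API key; the stub only reports how many messages it received, which makes the growing history visible. The model's replies are stored under the "assistant" role, which is the role the Chat Completions API uses for the model's own messages.

```python
# Sketch of the conversation-memory loop, with a stub in place of the real API call.
# ASSUMPTION: fake_chat_model is a hypothetical stand-in for openai.ChatCompletion.create;
# it only reports how many messages it was given, so the sketch runs offline.

SYSTEM_PROMPT = "I am an AI language model or you can call me Popeye the sailor man, and I'm here to help you."


def fake_chat_model(messages):
    """Hypothetical stub: returns a reply that reveals how much history it received."""
    return f"I can see {len(messages)} messages of our conversation."


def chat_turn(history, user_input, model=fake_chat_model):
    """One turn: record the user message, send the full history, record the reply."""
    history.append({"role": "user", "content": user_input})
    reply = model(history)
    # The model's own answers are stored with the "assistant" role.
    history.append({"role": "assistant", "content": reply})
    return reply


history = [{"role": "system", "content": SYSTEM_PROMPT}]
print(chat_turn(history, "Tell me about Popeye's friends."))  # I can see 2 messages of our conversation.
print(chat_turn(history, "Are they all friends?"))  # I can see 4 messages of our conversation.
print([m["role"] for m in history])  # ['system', 'user', 'assistant', 'user', 'assistant']
```

The second call sees four messages (system prompt, first question, first answer, second question), which is exactly the context a model needs to work out who “they” refers to.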

Replace your create_prompt function with the following code:

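```python
def create_prompt(user_input):
    # Append the new user message to the running conversation (the module-level prompt list)
    prompt.append({"role": "user", "content": user_input})
    return prompt
```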

Also define a list named prompt right after all the imports and add the following to it:

```python
# Base prompt that makes the bot act like Popeye
prompt = [{"role": "system", "content": "I am an AI language model or you can call me Popeye the sailor man, and I'm here to help you. You can sometimes add Popeye's character to your answers."}]
```

The first required change is done. Now we need to modify our generate_response function. Replace your code with this updated code:

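```python
def generate_response(user_input):
    # create_prompt returns the module-level prompt list with the new user message appended
    prompt = create_prompt(user_input)
    response = openai.ChatCompletion.create(
        model="gpt-4",
        messages=prompt
    )
    message = response.choices[0].message.content
    # Update the prompt with GPT-4's response as well; the model's own
    # replies belong under the "assistant" role, not "system"
    prompt.append({"role": "assistant", "content": message})
    return message
```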

We are now feeding our ChatGPT bot the whole conversation, and that is what lets it remember the context. The response we receive from the API is also added to our prompt, so the next request includes it too. For a better understanding, check the changes in the diagram.
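One thing to keep in mind: prompt is a single module-level list, so every browser talking to the same server shares one conversation. A minimal sketch of per-session histories could look like the following. It assumes a hypothetical session_id sent by the client (the article's UserQuery model only carries user_input), and the helper names get_history and add_user_message are illustrative, not part of the original code.

```python
# Sketch: one conversation history per session instead of one global list.
# ASSUMPTION: session_id is a hypothetical identifier sent by the client;
# the article's UserQuery model only carries user_input. Requires Python 3.9+.
import copy

BASE_PROMPT = [{"role": "system", "content": "I am Popeye the sailor man, and I'm here to help you."}]

# Maps each session ID to its own list of messages.
conversations: dict[str, list[dict[str, str]]] = {}


def get_history(session_id: str) -> list[dict[str, str]]:
    """Return the history for a session, starting it from the base prompt if it is new."""
    if session_id not in conversations:
        # deepcopy so sessions never share (or mutate) the same message dicts
        conversations[session_id] = copy.deepcopy(BASE_PROMPT)
    return conversations[session_id]


def add_user_message(session_id: str, user_input: str) -> list[dict[str, str]]:
    """Append a user message to that session's history and return the history."""
    history = get_history(session_id)
    history.append({"role": "user", "content": user_input})
    return history
```

Inside generate_response you would then pass the list returned by add_user_message as messages instead of the global prompt, and append the reply to that same list. Note that this sketch keeps everything in memory, so histories are lost when the server restarts.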

All the required changes are done. If you run your bot now and ask the same questions we asked at the beginning, it will answer them using our previous conversation. To try it, start your server by running uvicorn chatgpt:app in your terminal and open http://127.0.0.1:8000 in your browser.
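If you prefer testing from a script rather than the browser, the /chatgpt/ endpoint accepts a JSON body matching the UserQuery model ({"user_input": ...}) and returns {"response": ...}. The sketch below uses only the standard library and assumes the server runs on uvicorn's default address; the helper names build_request and ask are hypothetical.

```python
# Sketch: calling the /chatgpt/ endpoint from Python instead of the browser.
# ASSUMPTION: the server is running locally on uvicorn's default address.
import json
import urllib.request

BASE_URL = "http://127.0.0.1:8000"


def build_request(user_input: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a POST request whose JSON body matches the UserQuery model."""
    body = json.dumps({"user_input": user_input}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/chatgpt/",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(user_input: str, base_url: str = BASE_URL) -> str:
    """Send one question and return the bot's reply from the "response" field."""
    with urllib.request.urlopen(build_request(user_input, base_url)) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]


# Example (requires the server to be running):
# print(ask("Tell me about Popeye's friends."))
# print(ask("Are they all friends?"))
```

Asking the two related questions back to back is a quick way to check that the second answer uses the context of the first.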

As you can clearly see, it now remembers our conversation and answers accordingly. That’s it, folks! We have finished what we started: our ChatGPT bot now remembers our conversation.

Bonus Tip:

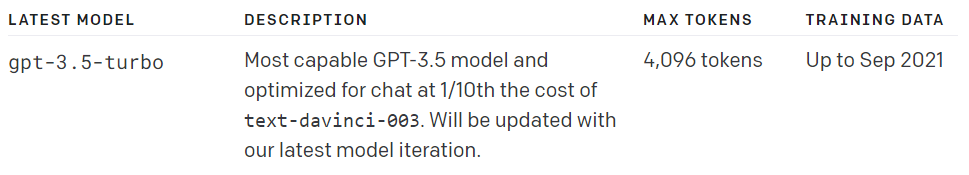

OpenAI different model has different limitations to what they can take as an input. For example

All the available model has certain limitations on how much they can consume at once or process at once. To solve this problem we can do the following

- Keep track of how many tokens each API call consumes (the API response includes a usage field with the token counts), and when the total crosses our threshold, reduce the size of our conversation prompt by removing the earliest messages from the list.

- Use a library such as LlamaIndex, which we are going to do in the next article.
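The first option can be sketched as follows. This is an illustration under stated assumptions: count_tokens is a hypothetical rough estimate (about four characters per token) rather than a real tokenizer, and max_tokens is whatever threshold you pick below your model's limit. With the real API you could instead compare the usage totals returned in each response against your threshold.

```python
# Sketch: keep the conversation under a token budget by dropping the oldest messages.
# ASSUMPTIONS: count_tokens is a rough, hypothetical heuristic (~4 characters per token
# plus a small per-message overhead); a real tokenizer would give exact counts.


def count_tokens(messages):
    """Very rough token estimate for a list of chat messages."""
    return sum(len(m["content"]) // 4 + 4 for m in messages)


def trim_history(messages, max_tokens):
    """Drop the oldest non-system messages until the estimate fits max_tokens.

    The system prompt (index 0) is always kept so the bot stays in character.
    Returns a new list; the input list is not modified.
    """
    system, rest = messages[:1], list(messages[1:])
    while rest and count_tokens(system + rest) > max_tokens:
        rest.pop(0)  # remove the oldest user/assistant message first
    return system + rest
```

Because the system message is always kept, the bot stays in character even after older turns are dropped; the trade-off is that anything said in the dropped turns is forgotten.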

The updated code has been added to the GitHub repo. Feel free to give it a ⭐