In this article, we’ll look at how to create a Rasa NLU model from JSON training data. We’ll also learn how to load NLU models into a Python (FastAPI) server and use them to predict the intent of a query.

First, let’s go over some basic terms and requirements before diving deeper into the article.

- Rasa – Rasa is an open-source, machine-learning-based framework for building chatbots and voice assistants. For a deeper introduction, refer to the article Rasa for beginners.

- NLU – Natural Language Understanding is a branch of AI that helps machines interpret text, for example by identifying the intent behind a user’s message, so that they can respond appropriately. It is widely used in chatbots and voice bots.

- FastAPI and Uvicorn – FastAPI is a Python framework for building HTTP APIs, and Uvicorn is the ASGI server that runs a FastAPI application. To learn more, refer to the article CRUD application in FastAPI.

With the requirements covered, I will skip steps such as creating a Python environment or installing Rasa, since the linked articles describe them well.

Problem Statement

Solution

Before building the solution, we need to keep a few things in mind.

Rasa needs training data (nlu.yml) and a configuration file (config.yml) to train an NLU model. Since we will generate the nlu.yml file from JSON data, only config.yml has to be present in the code directory. My config.yml looks like this; you can modify it to suit your needs:

```yaml
# pipeline:
# # No configuration for the NLU pipeline was provided. The following default pipeline was used to train your model.
# # If you'd like to customize it, uncomment and adjust the pipeline.
# # See https://rasa.com/docs/rasa/tuning-your-model for more information.

language: en

pipeline:
  - name: WhitespaceTokenizer
  - name: CountVectorsFeaturizer
  - name: CountVectorsFeaturizer
    analyzer: "char_wb"
    min_ngram: 1
    max_ngram: 4
  - name: DIETClassifier
    epochs: 120
    entity_recognition: False
  - name: ResponseSelector
    epochs: 100
  - name: FallbackClassifier
    threshold: 0.85

policies:
  - name: TEDPolicy
    max_history: 10
    epochs: 20
  - name: AugmentedMemoizationPolicy
    max_history: 6
  - name: RulePolicy
```

API Endpoints

The first endpoint, /trainNLUModel, performs the following tasks:

- Converts the JSON training data into the YAML format that Rasa expects in nlu.yml.

- Trains an NLU model using the newly created nlu.yml.

- Loads the trained model into memory at runtime, so that it is ready to use immediately without restarting the service.
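The conversion step can also be written as a standalone function, which makes it easy to inspect the generated YAML without training anything. This is a minimal sketch rather than the code the server runs; the function name json_to_nlu_yaml is hypothetical, and it assumes the Rasa 3.x NLU training-data layout (version, nlu, intent, examples) that the endpoint writes.

```python
from typing import Dict, List


def json_to_nlu_yaml(nlu_data: Dict[str, List[str]], version: str = "3.0") -> str:
    """Render {intent_name: [examples]} as Rasa NLU training data in YAML."""
    lines = [f'version: "{version}"', "nlu:"]
    for intent, examples in nlu_data.items():
        lines.append(f"- intent: {intent}")
        # "examples" sits two spaces under the list item and opens a literal block
        lines.append("  examples: |")
        for example in examples:
            # Collapse newlines/extra spaces so each example stays on one line,
            # indented four spaces inside the block scalar
            lines.append(f"    - {' '.join(example.split())}")
    return "\n".join(lines) + "\n"
```

Indentation is significant here: if the examples key or the example lines lose their leading spaces, the file is no longer valid Rasa training data.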

The code for this endpoint looks like this:

```python
@app.post("/trainNLUModel")
async def train_nlu_model(data: NLURequest):
    # Write the JSON training data to a temporary <modelName>_nlu.yml file,
    # because train_nlu expects a path to a training-data file
    file_name = f"{data.modelName}_nlu.yml"
    with open(file_name, "w", encoding="utf-8") as file:
        file.write('version: "3.0"\nnlu:\n')
        # Save the training examples under their intent (YAML indentation matters)
        for intent, intent_examples in data.nluData.items():
            file.write(f"- intent: {intent}\n")
            file.write("  examples: |\n")
            for example in intent_examples:
                file.write(f"    - {example}\n")

    try:
        # Train an NLU model on the received data and save it in MODEL_SAVING_DIR;
        # train_nlu returns the path of the trained model archive (<modelName>.tar.gz)
        model_path = train_nlu(
            "config.yml", file_name, MODEL_SAVING_DIR, fixed_model_name=data.modelName
        )
    finally:
        # Remove the temporary yml file, as it is not required after training
        if os.path.exists(file_name):
            os.remove(file_name)

    # Load the newly trained NLU model into memory
    RasaNLUModel.loadModel(model_path, modelName=data.modelName)
    return {"message": "Model Loaded into memory successfully"}
```

The second endpoint, /predictText, takes a user query, processes it with the requested NLU model, and returns the prediction.

The code for this endpoint looks like this:

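```python
@app.get("/predictText")
async def predict_text(modelName: str, query: str):
    # Look up the requested model among the models loaded in memory
    agent_nlu = RasaNLUModel._instance_.get(modelName)
    if agent_nlu is None:
        raise HTTPException(status_code=404, detail=f"Model '{modelName}' is not loaded")
    # Run the query through the NLU pipeline (intent, entities, intent ranking)
    message = await agent_nlu.parse_message(query)
    return {"prediction_info": message}
```

Next is the RasaNLUModel class, which keeps every loaded model in a dictionary keyed by model name, so that any loaded model can be accessed and used at any time.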

```python
class RasaNLUModel:
    _instance_ = {}  # Active Rasa NLU models, keyed by model name

    @staticmethod
    def init():
        """Load the default Rasa model while the server is being initialised."""
        defaultModelPath = MODEL_SAVING_DIR + "/default.tar.gz"
        RasaNLUModel._instance_["default"] = Agent.load(defaultModelPath)

    @staticmethod
    def loadModel(modelpath, modelName):
        """Load a custom Rasa NLU model into the running server without restarting it."""
        RasaNLUModel._instance_[modelName] = Agent.load(modelpath)
```

The Server class loads the default model at startup. If you don’t have a default model, comment out the Server.loadModels() call.

```python
class Server:
    @staticmethod
    def loadModels():
        RasaNLUModel.init()
        print("Model loading done...")


# Runs once at import time, i.e. when uvicorn loads main.py
Server.loadModels()
```

Your final code will look something like this; I have named the file main.py:

```python
import os
from typing import Dict, List

from fastapi import FastAPI, HTTPException
from pydantic import BaseModel
from rasa.core.agent import Agent
from rasa.model_training import train_nlu

app = FastAPI()

MODEL_SAVING_DIR = "./Models"


# Request schema for creating an NLU model
class NLURequest(BaseModel):
    nluData: Dict[str, List[str]]  # intent name -> training examples
    modelName: str


class RasaNLUModel:
    _instance_ = {}  # Active Rasa NLU models, keyed by model name

    @staticmethod
    def init():
        """Load the default Rasa model while the server is being initialised."""
        defaultModelPath = MODEL_SAVING_DIR + "/default.tar.gz"
        RasaNLUModel._instance_["default"] = Agent.load(defaultModelPath)

    @staticmethod
    def loadModel(modelpath, modelName):
        """Load a custom Rasa NLU model into the running server without restarting it."""
        RasaNLUModel._instance_[modelName] = Agent.load(modelpath)


@app.post("/trainNLUModel")
async def train_nlu_model(data: NLURequest):
    # Write the client's NLU data to a temporary file, since train_nlu expects a file path
    file_name = f"{data.modelName}_nlu.yml"
    with open(file_name, "w", encoding="utf-8") as file:
        file.write('version: "3.0"\nnlu:\n')
        # Save the training examples under their intent (YAML indentation matters)
        for intent, intent_examples in data.nluData.items():
            file.write(f"- intent: {intent}\n")
            file.write("  examples: |\n")
            for example in intent_examples:
                file.write(f"    - {example}\n")

    try:
        # Train an NLU model on the client's data and save it in MODEL_SAVING_DIR;
        # train_nlu returns the path of the trained model archive
        model_path = train_nlu(
            "config.yml", file_name, MODEL_SAVING_DIR, fixed_model_name=data.modelName
        )
    finally:
        # Remove the temporary yml file, as it is not required after training
        if os.path.exists(file_name):
            os.remove(file_name)

    # Load the newly trained NLU model into memory
    RasaNLUModel.loadModel(model_path, modelName=data.modelName)
    return {"message": "Model Loaded into memory successfully"}


@app.get("/predictText")
async def predict_text(modelName: str, query: str):
    # Look up the requested model among the models loaded in memory
    agent_nlu = RasaNLUModel._instance_.get(modelName)
    if agent_nlu is None:
        raise HTTPException(status_code=404, detail=f"Model '{modelName}' is not loaded")
    message = await agent_nlu.parse_message(query)
    return {"prediction_info": message}


class Server:
    @staticmethod
    def loadModels():
        RasaNLUModel.init()
        print("Model loading done...")


# Runs once at import time, i.e. when uvicorn loads main.py
Server.loadModels()
```

At this point, my project structure looks like this:

```
RasaNLUProject/
├── .git/
├── .gitignore
├── README.md
├── main.py
├── config.yml
└── Models/
```

To run the code, execute the following command in the activated Python environment: uvicorn main:app. This starts the server, which by default listens on http://127.0.0.1:8000.

Testing the APIs

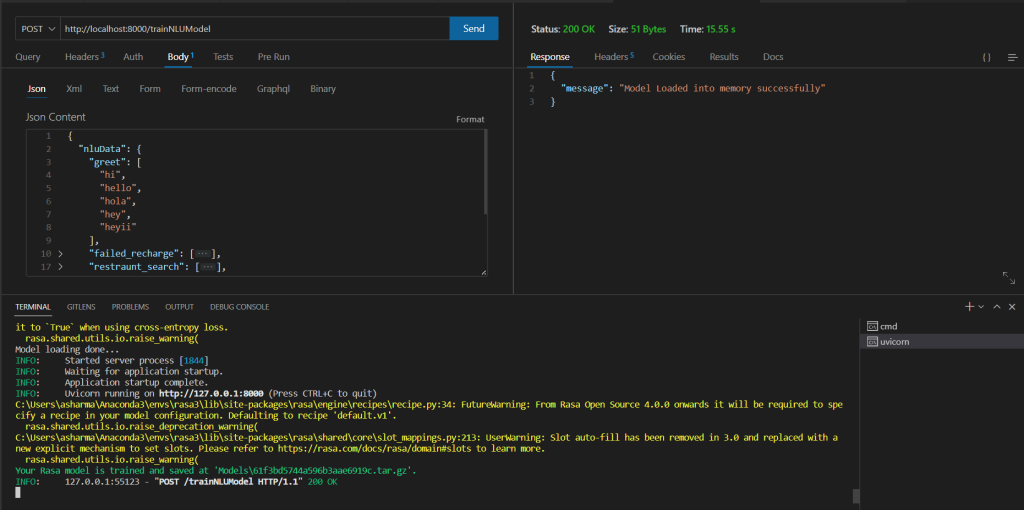

To train a model, we will use the following data. nluData maps each intent name to a few training examples, and modelName is the identifier we will later use to select this model when predicting text:

```json
{
  "nluData": {
    "greet": [
      "hi",
      "hello",
      "hola",
      "hey",
      "heyii"
    ],
    "failed_recharge": [
      "recharge failed during transaction",
      "money deducted but not recharged",
      "recharge not reflecting in account",
      "transaction failed, what should i do",
      "my money is lost, help me"
    ],
    "restraunt_search": [
      "i'm looking for a place to eat",
      "i'm looking for a place in the north of town",
      "show me chinese restaurants",
      "show me a mexican place in the centre",
      "i am looking for an indian spot",
      "search for restaurants",
      "anywhere in the west",
      "central indian restaurant"
    ],
    "affirm": [
      "indeed",
      "okay",
      "ok",
      "confirmed",
      "correct",
      "that's right"
    ]
  },
  "modelName": "61f3bd5744a596b3aae6919c"
}
```

Use Postman, or the Thunder Client extension in VS Code, to send this data as the JSON body of a POST request to /trainNLUModel. This is how it looks when I make the request to train the model:

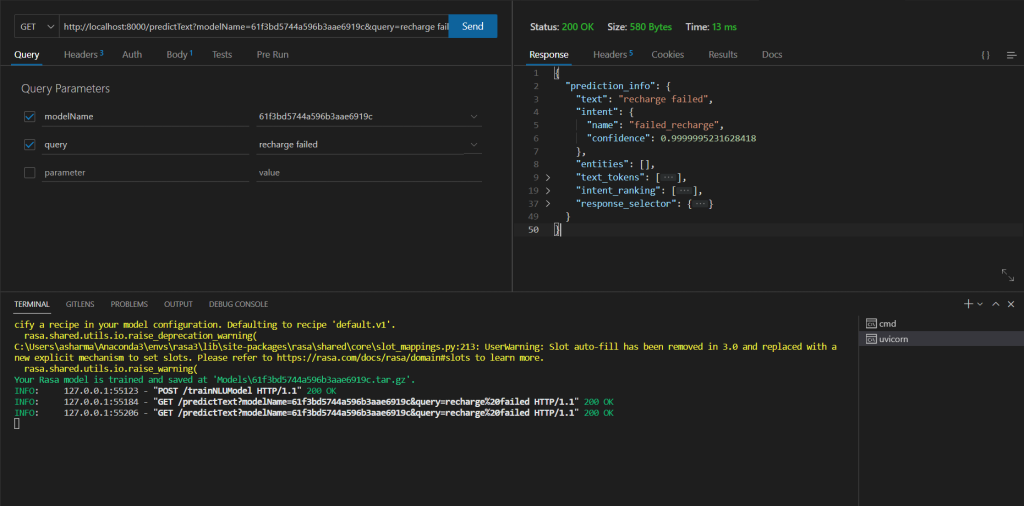
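If you prefer a script to Postman or Thunder Client, both endpoints can also be called with Python’s standard library. This is a sketch, assuming the server runs locally on Uvicorn’s default address (http://127.0.0.1:8000); the helper names build_train_request, build_predict_url, and send are hypothetical.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Dict, List, Union

# Assumption: the server was started with `uvicorn main:app` on the default host/port
BASE_URL = "http://127.0.0.1:8000"


def build_train_request(
    nlu_data: Dict[str, List[str]], model_name: str, base_url: str = BASE_URL
) -> urllib.request.Request:
    """Build a POST /trainNLUModel request whose JSON body matches NLURequest."""
    body = json.dumps({"nluData": nlu_data, "modelName": model_name}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/trainNLUModel",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_predict_url(model_name: str, query: str, base_url: str = BASE_URL) -> str:
    """GET /predictText takes modelName and query as URL-encoded query parameters."""
    params = urllib.parse.urlencode({"modelName": model_name, "query": query})
    return f"{base_url}/predictText?{params}"


def send(request: Union[str, urllib.request.Request]) -> Dict[str, Any]:
    """Send a URL or Request and decode the JSON reply."""
    # Training can take a while, so allow a generous timeout
    with urllib.request.urlopen(request, timeout=600) as response:
        return json.loads(response.read().decode("utf-8"))


# Example usage once the server is running (not executed here):
#   payload = {"greet": ["hi", "hello"], "affirm": ["ok", "correct"]}
#   print(send(build_train_request(payload, "demo")))
#   print(send(build_predict_url("demo", "hello there")))
```

Building the request and the URL separately from sending them keeps the encoding logic easy to check without a running server.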

To check whether the model has been loaded and is active, we call the second endpoint, /predictText, passing the modelName used for training and the query "recharge failed" as query parameters.

Here is the response as text:

```json
{
  "prediction_info": {
    "text": "recharge failed",
    "intent": {
      "name": "failed_recharge",
      "confidence": 0.9999995231628418
    },
    "entities": [],
    "text_tokens": [[0, 8], [9, 15]],
    "intent_ranking": [
      {"name": "failed_recharge", "confidence": 0.9999995231628418},
      {"name": "affirm", "confidence": 4.1333024114464934e-07},
      {"name": "greet", "confidence": 5.004184799872746e-08},
      {"name": "restraunt_search", "confidence": 4.142692588970931e-08}
    ],
    "response_selector": {
      "all_retrieval_intents": [],
      "default": {
        "response": {
          "responses": null,
          "confidence": 0.0,
          "intent_response_key": null,
          "utter_action": "utter_None"
        },
        "ranking": []
      }
    }
  }
}
```

Conclusion

Your server can now train, deploy, and serve NLU models on the fly. You can load multiple models and use any of them by passing the corresponding modelName. In this article, we created an NLU model from JSON data and built an always-on server that picks up newly trained models without a restart.

The NLU model only returns the intent detected in your query. To return a response for that intent, you can map intents to responses in a dictionary or fetch responses directly from a database. In the next article, I will train a full Rasa model (NLU + Core) that can return responses itself.
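A minimal sketch of the response-dictionary approach, assuming the prediction_info payload returned by /predictText; the RESPONSES table, its reply texts, DEFAULT_RESPONSE, and the respond helper are hypothetical.

```python
from typing import Any, Dict

# Hypothetical replies; keys match the intent names in the sample training data
RESPONSES: Dict[str, str] = {
    "greet": "Hello! How can I help you today?",
    "failed_recharge": "Sorry about that. Please share your transaction ID so we can check it.",
    "restraunt_search": "Sure, which cuisine or area are you interested in?",
    "affirm": "Great!",
}
DEFAULT_RESPONSE = "Sorry, I didn't understand that. Could you rephrase?"


def respond(prediction_info: Dict[str, Any]) -> str:
    """Pick a reply for the top intent in a /predictText "prediction_info" payload."""
    intent = prediction_info.get("intent") or {}
    # Unknown intents, including "nlu_fallback", fall through to the default reply
    return RESPONSES.get(intent.get("name"), DEFAULT_RESPONSE)
```

Because config.yml includes a FallbackClassifier with a threshold of 0.85, low-confidence queries come back with the intent nlu_fallback, which has no entry in the table and therefore gets the default reply.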

The source code is available in this GitHub repo. Feel free to give it a star ⭐